Hello, I'm Jingyuan Li

Fifth-year PhD @ University of Washington

I am a PhD student in the NeuroAI Lab at the University of Washington, Seattle, advised by Prof. Eli Shlizerman. Before joining the University of Washington, I worked with Prof. Tai Sing Lee at Carnegie Mellon University (CMU).

My primary interest lies in understanding time-series data, particularly time-series neural signals and behavioral recordings.

Methodologically, I focus on deep learning approaches such as Recurrent Neural Networks, Graph Neural Networks, and Transformers.

My research topics include semi-supervised and unsupervised learning, representation learning, computational neuroscience, brain-computer interfaces, and robustness and uncertainty evaluation.

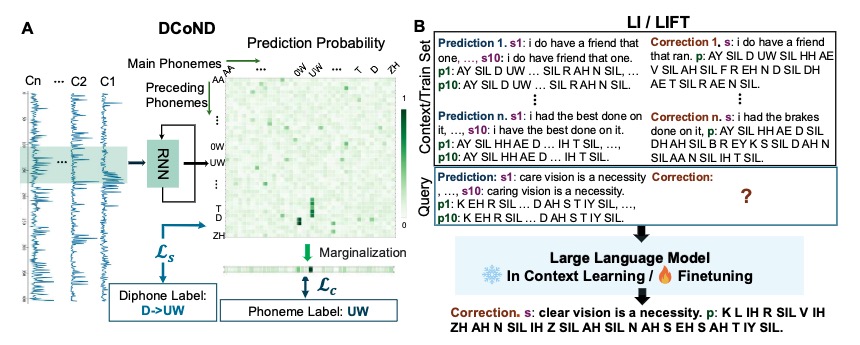

Authors: Jingyuan Li, Trung Le, Chaofei Fan, Mingfei Chen, Eli Shlizerman.

In submission to ICLR 2025

I developed DCoND-LIFT, a method that leverages large language models to translate neural signals into text, reducing the word error rate from 9.93% to 5.77% compared to the baseline.

Keywords: Brain-Computer Interface, Brain-to-Text, Large Language Model, Phoneme Decoding

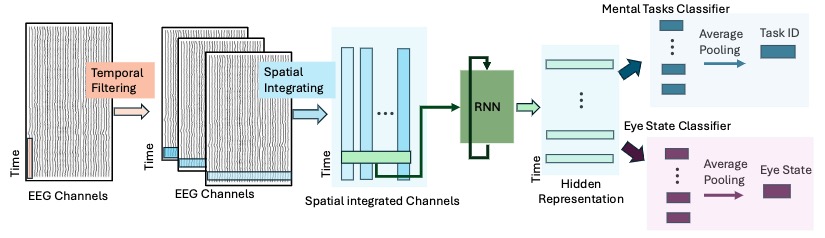

Authors: Jingyuan Li, Yansen Wang, Nie Lin, Dongsheng Li.

In submission to ICASSP 2025

Preprint

I introduced four mental tasks whose EEG recordings can be mapped to 35 characters, including letters and numbers, with high accuracy, improving over direct character imagination by around 8.5%.

Keywords: EEG, Brain-Computer Interface, Brain-to-Character, GRU, CNN

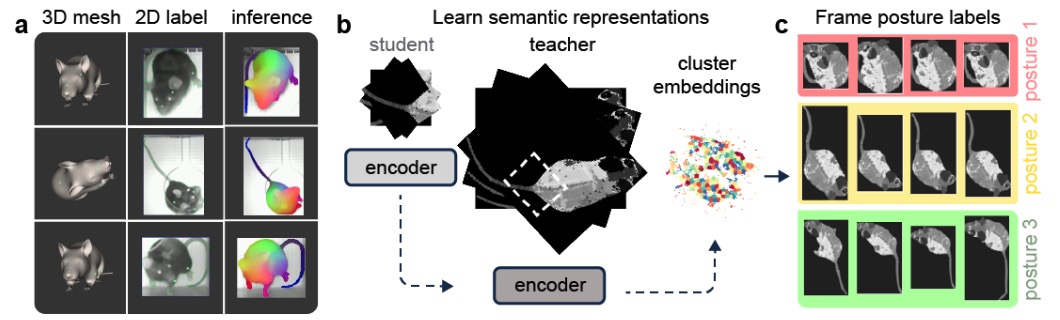

Authors: Ying Yu*, Jingyuan Li*, Kun Su, Anna Bowen, Carlos Campos. *Equal contribution.

Cosyne 2024 Oral

Talk

I presented a 2D-to-3D mapping of animal posture from which behavior segments can be retrieved given a single (one-shot) example.

Keywords: Posture modeling, Behavior segmentation

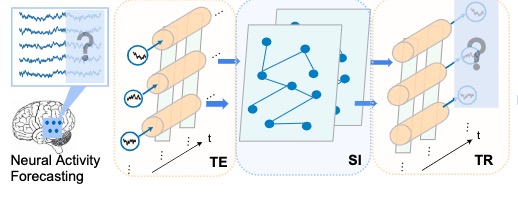

Authors: Jingyuan Li, Leo Scholl, Trung Le, Pavithra Rajeswaran, Amy Orsborn, Eli Shlizerman.

NeurIPS 2023

Paper

I introduced a Graph Neural Network for predicting future neural signals, which helps recover the underlying neural dependencies.

Keywords: Intracortical Recordings, Brain-Computer Interface, Graph Neural Networks

Authors: Jingyuan Li, Trung Le, Eli Shlizerman.

IEEE TNNLS, 2023

Paper

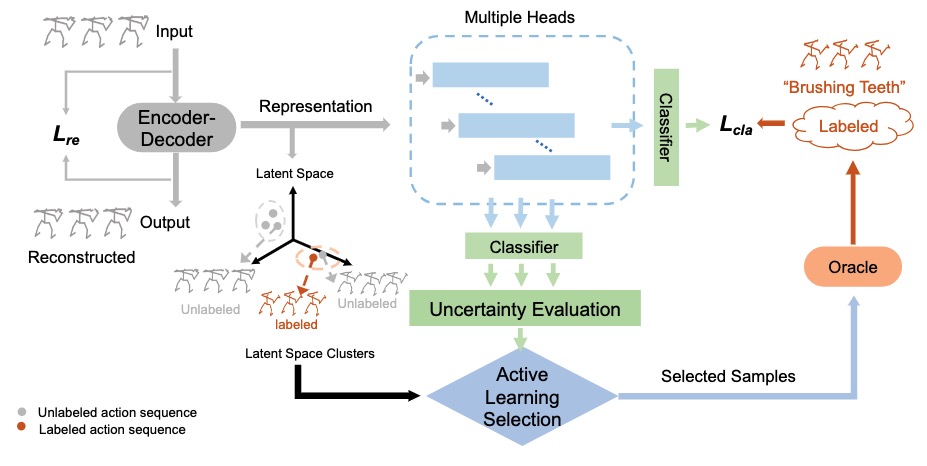

I proposed an active learning method for semi-supervised behavior recognition that achieves strong action recognition performance when only a limited number of annotated samples is available.

Keywords: Active Learning, Semi-supervised Learning, Action Recognition

Authors: Jingyuan Li, Moishe Keselman, Eli Shlizerman.

arXiv, 2022

Preprint

GitHub

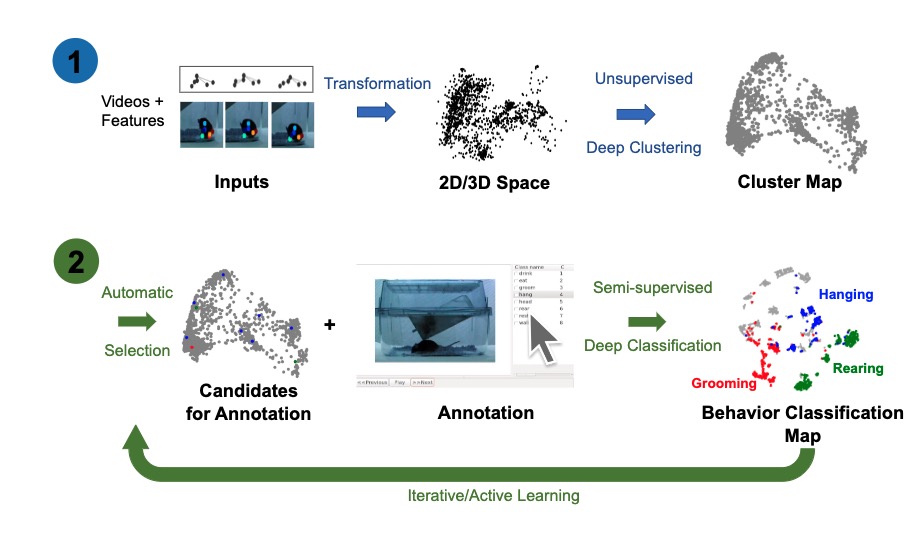

I implemented an active learning pipeline for animal behavior recognition that requires only a few labeled behavior examples, together with a GUI for general use.

Keywords: Animal Behavior Recognition, Active Learning, Graphical User Interface